Summary

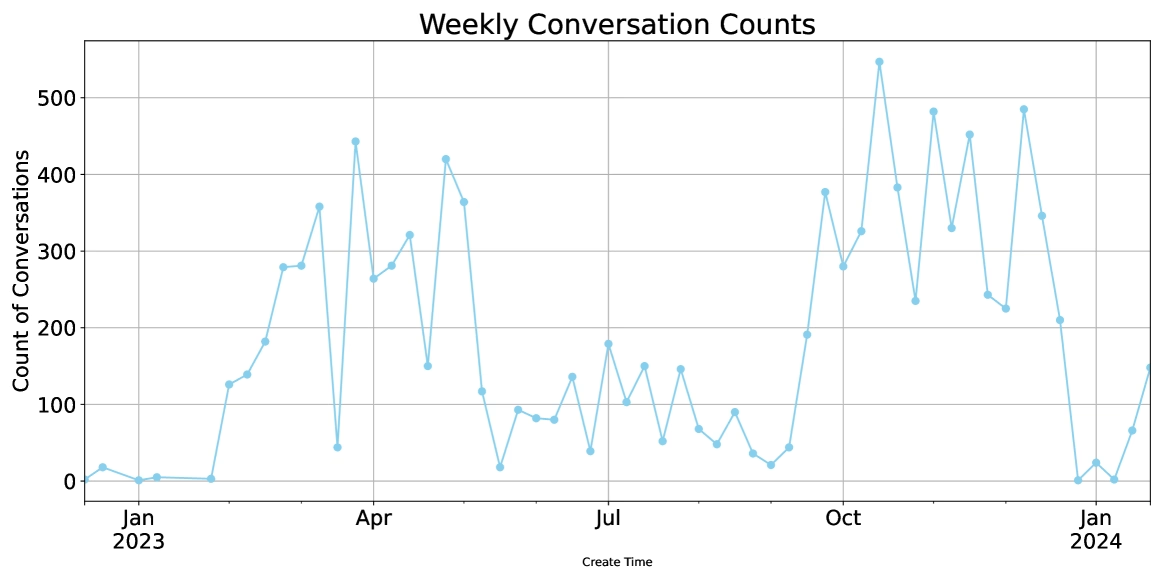

Below we provide a lay summary of Ammari et al.’s (Rutgers) longitudinal research on how students interact with ChatGPT. The study was conducted from December 2022 to January 2024, with results published in May 2025.

A recent study analyzing how undergraduate students use ChatGPT provides a detailed picture of user behavior that moves beyond self-reported surveys to examine naturalistic interaction logs. The research, titled “How Students (Really) Use ChatGPT: Uncovering Experiences Among Undergraduate Students”, sought to understand the day-to-day ways students integrate the AI tool into their academic routines by observing their actual, self-directed usage patterns.

The findings reveal a complex relationship where students treat the AI as both a tool and a learning partner, actively working to manage its limitations. The analysis identified five dominant categories of use that frame the student-AI interaction.

What are the five dominant categories of use?

The study systematically categorized thousands of interactions, identifying five primary ways students engage with the platform:

- Information Seeking: Students used the tool to ask questions on a wide range of subjects, including academic, medical, and cultural topics. This behavior extends beyond simple fact-checking into more complex information discovery.

- Content Generation: A significant use case was the generation of original content. This included drafting academic and professional documents, using the AI as a co-creator in the writing process.

- Language Refinement: Users frequently employed ChatGPT as an advanced editing tool to refine their own writing, improving clarity, style, and grammar.

- Meta-Cognitive Engagement: The research observed students using the AI to reflect on their own learning processes. This “meta-cognitive” interaction involved using the chatbot to co-regulate their progress and manage their learning journey.

- Conversational Repair: Users seem to persevere, rather than give up, when an answer engine produces an error or misunderstands a prompt. They actively engage in “conversational repair,” rephrasing questions, providing clarification and context, and adapting their prompts to guide the AI toward the desired outcome.

Are there predictors of long-term engagement?

The study also conducted behavioral modeling to determine which usage patterns predicted sustained, long-term engagement with the platform. The analysis found that users were most likely to continue using ChatGPT when they were engaged in structured, goal-driven tasks. Specific examples of these “sticky” tasks include writing code, solving multiple-choice questions, and preparing job applications. This suggests that the tool’s utility in accomplishing specific, tangible goals is a stronger driver of retention than casual, exploratory use.

Note: The authors grounded their research in two separate frameworks: Self-Directed Learning and Uses and Gratifications Theory. The latter was particularly interesting to me because the initial theory was established nearly 85 years ago with radio listeners! It was then broken down into five main assumptions in the 1970s that I believe are still relevant over five decades later.

The AI as a “Socio-Technical Actor”

A central conclusion of the research is that students interact with ChatGPT as more than just a passive cognitive tool. The paper frames ChatGPT as a “socio-technical actor that co-constructs the learning experience with the student”. The observed behaviors, particularly the persistence shown in conversational repair and the use of meta-cognitive reflection, indicate that students are forming a dynamic, collaborative relationship with the technology. They actively manage its affordances and limitations, adapting their own strategies to work with the system effectively.

The study reveals that users are not passive recipients of information but are instead active, adaptive partners in a dialogue, engaging in a range of sophisticated behaviors to achieve their goals.

What are the implications for AI search?

The behaviors identified in the study signal a significant departure from the interaction model that has defined the digital age: the search engine. This shift has profound implications for how users develop loyalty to and trust in online platforms, and it may help explain the unprecedented speed of generative AI adoption and a disproportionate conversion rate for purchasing products and services (4.4x, relative to traditional search).

Traditional search is an inherently one-sided, “flat” interaction. The user submits a query, and the platform returns a ranked list of potential answers. The dialogue begins and ends with this single transaction. Loyalty in this paradigm is built on efficiency and the perceived authority of the algorithm. Some would argue it was simply built from a lack of options. Trust is placed in Google’s ability to sort the web’s information correctly and deliver the most relevant link. The relationship is with a tool, not a partner.

Ammari’s findings illustrate a starkly different, more collaborative model. The prevalence of “conversational repair” is the most telling indicator of this change. Unlike a failed search query, which often leads to abandonment or a completely new query, users of generative AI invest their own effort to correct the system’s course. They rephrase, clarify, and guide the AI, treating the interaction as a dialogue to be improved rather than a transaction that has failed. This willingness to co-create the outcome signifies a deeper level of engagement and a new form of trust—not in the AI’s infallibility, but in its capacity to be guided toward a “correct” answer.

This collaborative dynamic is the bedrock of a new, more resilient form of platform loyalty. The research shows that long-term engagement is anchored by “goal-driven tasks” like writing code or preparing a job application. When a platform becomes integral to achieving a tangible outcome, it moves from being a utility to being a partner. This task-oriented loyalty is far stickier than the transactional loyalty of traditional search. The platform is no longer just a source of information; it is a collaborator in the user’s personal and professional workflows.

This sense of partnership or collaboration could be an explanation for the immense and rapid adoption of generative AI. The relationship between user and platform is becoming… well, relational. Trust is built on the platform’s ability to provide incrementally better responses based on the user-provided context. This trust seems to build into anthropomorphism, the act of attributing human characteristics to animals or objects.

About the Author: Adam Malamis

Adam Malamis is Head of Product at Gander, where he leads development of the company's AI analytics platform for tracking brand visibility across generative engines like ChatGPT, Gemini, and Perplexity.

With over 20 years of experience designing digital products for regulated industries including healthcare and finance, he brings a focus on information accuracy and user-centered design to the emerging field of Generative Engine Optimization (GEO). Adam holds certifications in accessibility (CPACC) and UX management from Nielsen Norman Group. When he's not analyzing AI search patterns, he's usually experimenting in the kitchen, in the garden, or exploring landscapes with his camera.